Key Takeaways

- Tests of AI tools and “premade prompts” for UX analysis or CRO suggestions show Accuracy Rates of just 50–75%; worse, many have no documented Accuracy Rate at all

- Adopting 1 wrong UX suggestion can result in astronomical business costs

- You should always demand that AI tools for UX analysis and CRO document their Accuracy Rate

- At Baymard, we will not release any automated UX tooling with less than 95% Accuracy Rate

This article has been updated with new data from February 2026.

We’ve spent the past year building UX-Ray 2.0, which scans live ecommerce websites (or images of upcoming designs) and provides an instant heuristic evaluation of the site’s UX.

The evaluation is performed across 207 UX heuristics (Baymard guidelines) — all based on Baymard’s 200,000+ hours of large-scale UX research findings.

The unique part? UX-Ray has a documented 95% Accuracy Rate, measured against how human expert UX auditors evaluated the same sites.

In comparison, public tests of generative AI tools and “premade prompts” for UX analysis or Conversion Rate Optimization (CRO) suggestions show Accuracy Rates of just 50–75%, and many such tools, worse still, have no documented Accuracy Rate at all.

This matters because any LLM prompting or generative AI tool made for UX analysis or CRO suggestions, where the Accuracy Rate is either undocumented or below 95%, is simply not safe to apply to a commercial website.

The lost revenue from implementing just a few UX changes that hurt conversions is far greater than the savings from not ensuring changes are properly backed by UX research.

That’s why it’s reasonable to demand that AI tools for UX analysis and CRO document their Accuracy Rate.

In this article, we’ll cover the typical Accuracy Rates of generative AI tools, the astronomical business cost of adopting 1 wrong UX suggestion, why you should demand that AI tools document their Accuracy Rate, and how UX-Ray has achieved 95+% human-level accuracy.

Generative AI UX Tools Typically Have an Accuracy Rate of 50–75%

2 years ago, we documented that ChatGPT 4.0 had an Accuracy Rate of just 20% when used for heuristic evaluations.

Since then, more research has come out regarding the Accuracy Rate of various generative AI tools for UX analysis or CRO suggestions.

Image credit: Microsoft UX researchers Jackie Ianni and Serena Hillman, March 2025. The bottom row is the error rate; the Accuracy Rate is its complement (100% minus the error rate).

In March 2025, two Microsoft UX researchers used a similar study approach to see how far generative AI and LLMs had come at conducting heuristic evaluations.

They found that the AI tools had Accuracy Rates of just 50%, 62%, 67%, and 75%.

But they also noted that a 75% Accuracy Rate was only achievable in one AI tool, and only when it was configured to dramatically reduce the number of UX opportunities it could identify.

Therefore, to achieve a 75% Accuracy Rate, the tool would miss 13 of 16 (i.e., 81%) UX opportunities that the human expert identified on the same webpage.
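The two figures measure different things, which is how a tool can score 75% while still missing most issues. A short worked calculation, assuming (our reading of the figure caption) that the Accuracy Rate is 100% minus the reported error rate, and that the miss rate is the share of the human expert’s 16 opportunities the tool did not surface:

$$
\text{Accuracy Rate} = 100\% - \text{error rate}
$$

$$
\text{missed} = \frac{13}{16} = 0.8125 \approx 81\%, \qquad \text{found} = \frac{16 - 13}{16} = \frac{3}{16} \approx 19\%
$$

Under this reading, the 75% describes how reliable the tool’s reported issues were, while the tool surfaced only about 3 of the 16 issues the human expert found.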

The Astronomical Business Costs of Adopting 1 Wrong UX Suggestion

It may seem like an okay trade-off to get more high-performing UX suggestions, even if some of the suggestions are harmful.

Indeed, a growing number of generative AI tools for UX analysis are appearing, along with an even larger number of “premade UX/CRO audit prompts” circulating on LinkedIn and similar places.

However, at Baymard we advise the utmost caution against using LLMs and generative AI systems to perform UX analysis or generate CRO suggestions intended to improve any commercial website.

Why? The business revenue lost from implementing just 1 wrong UX suggestion on your site is simply too high.

Yes, an AI tool might give you 10 UX/CRO suggestions for your website. And yes, 5–7 of those suggestions are actually correct and good changes you should make.

The problem is that it also makes 3–5 suggestions that can harm conversions, and you cannot tell them apart from the good ones.

Thus, if all the suggestions are implemented, the UX will improve in some areas but suffer in others.

Depending on the suggestions implemented, this can at best leave the site’s UX “running in place” while wasting resources on the effort, and at worst actively harm the site’s UX and reduce its conversion rate.

In short, the typical Accuracy Rates of 50–75% are simply too low to warrant using generative AI UX tools on commercial websites.

The Real Business Impact of 1 Bad UX Change

Even seemingly small UI changes, such as the way additional images are indicated in the image gallery, can make a big difference for both the ecommerce site and its users.

The crucial point that business managers and leaders need to understand about UX improvements and CRO is that even seemingly small UI changes can have a dramatic impact on website conversion rates and business revenue.

A small sample of examples from UX audits that we performed for leading brands and Fortune 500 companies helps illustrate the point:

- A very large US retailer saw a 1% conversion rate increase after switching from dots to thumbnails to indicate additional product images (example depicted above) (Baymard guideline #774)

- A large luxury retail site saw an average 0.5% reduction in cart abandonment after switching to showing “estimated delivery dates” for each of the delivery options in their checkout flow (guideline #543)

- A US sports retailer saw a $10 million increase in annual sales just from duplicating the “Place Order” button from the bottom of the “Review Order” step to the top (guideline #556)

- A top-3 US airline had a 90+% mobile web abandonment rate in its booking flow, where it used asterisks to indicate optional form fields (guideline #686)

The web is full of A/B case studies showing similar examples, where seemingly small UI changes cause massive financial impact.

The point of the above examples: UX changes that can seem like small UI details often have a massive financial impact.

That’s a challenge, because it makes it very hard to discern good UX from bad UX just by looking at the UI. That is true for humans and AI alike.

Consequently, a single wrong UX implementation can have astronomical costs for a business due to lost online revenue.

On top of this come the internal design and engineering costs wasted on a poor redesign, and the opportunity cost of falling behind your competition.

In short: when compared to the high business costs of implementing a poorly performing UX suggestion, the cost of grounding website decisions in properly verified UX research is marginal.

But Can’t We Just Stop These AI Mistakes Before They Go Into Our Site?

Spotting and correcting the 3–5 harmful UX/CRO change suggestions that the AI tool intersperses among the 5–7 good ones would require either:

1. That you A/B split-test all the AI-generated website changes, including those that contain critical conversion mistakes, leading to significant lost revenue for that traffic cohort.

This means half of your A/B test traffic now experiences a serious conversion hit, on top of a significant delay in actually shipping changes. (In comparison, when running a well-planned A/B test, a UX/CRO team would never ship a “treatment” design with known conversion mistakes built into it.)

2. That you use an experienced UX/CRO expert to quality-assure the AI-generated UX changes, negating any speed gains from using AI-based UX/CRO analysis.

Telling good UX from bad requires a detailed in-depth walkthrough.

In reality, the expert will spend as much time reviewing the AI suggestions as it would have taken them to audit the original site to begin with.

Both of the above options offset the benefits of trying to use the AI tool in the first place.

Be Very Cautious When Considering Relying Solely on LLMs or Generative Models for UX

At Baymard, along with many authoritative voices in the UX research space (incl. NNGroup), we advise against using LLMs or generative AI tools as the main source for analyzing or determining what UX changes you should make to your website.

The accuracy of such methods is either too low (below 95%) or simply unknown, making them unsuitable for any commercial website.

Don’t get me wrong — at Baymard, we are big believers in the efficiency and speed increases generative AI and LLMs can generally bring.

However, we also believe in the critical importance of not providing inaccurate UX advice — which is why we think generative AI UX tools work best when used for a defined set of rule-based checks, rather than offering open-ended advice.

In the end, it’s counterproductive to try to save costs and increase speed to ship if doing so risks letting more poorly performing UI/UX changes slip into your production website designs.

Demand AI Tools for UX/CRO Publish Their Accuracy Rate

If you intend to use any AI tool that promises UX analysis or CRO suggestions, then it is reasonable for you to ask to see the documentation for the AI tool’s Accuracy Rate.

The Accuracy Rate documentation should also be based on testing a broad range of websites (20+), not just a few cherry-picked websites.

Any Accuracy Rate that is either undocumented or below 95% simply won’t be “safe for work” on commercial websites where revenue is on the line.

The short-term efficiency gains in one specific department (UX/CRO) will be wiped out by lost online revenue if just a few poor UX change suggestions are implemented.

UX-Ray 2.0’s Accuracy Rate Is 95% (Incl. Test Data)

At Baymard, we have firmly decided not to release any automated UX tooling with a low or undocumented Accuracy Rate.

Before we add any of our 700+ research-based UX heuristics to UX-Ray, we first ensure that UX-Ray achieves a documented 95+% Accuracy Rate on it.

This Accuracy Rate is measured against the evaluations of human UX experts, in this case Baymard’s highly specialized UX audit team, which normally audits Fortune 500 websites and the world’s leading brands.

The results of UX-Ray and human auditors are then manually compared line-by-line.

Only when the 95% accuracy is repeatable across a large number of websites will a heuristic be added to UX-Ray.
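To make the comparison concrete, here is a minimal Python sketch of how a per-heuristic Accuracy Rate could be computed from line-by-line comparison data and then gated at 95%. It is not Baymard’s actual pipeline: the `Judgment` record, the verdict labels, and the functions `accuracy_by_heuristic` and `qualifying_heuristics` are hypothetical, the usage data is a toy example, and the `min_sites=20` default is an assumption borrowed from the “20+ websites” recommendation in this article, standing in for “a large number of websites”.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Judgment:
    """One line of a hypothetical line-by-line comparison."""
    site: str
    heuristic_id: int
    tool_verdict: str   # e.g. "adhered", "violated", "not_applicable"
    human_verdict: str  # expert auditor's verdict on the same screenshots


def accuracy_by_heuristic(judgments):
    """Return {heuristic_id: (share of matching verdicts, distinct sites)}."""
    matches = defaultdict(int)
    totals = defaultdict(int)
    sites = defaultdict(set)
    for j in judgments:
        totals[j.heuristic_id] += 1
        sites[j.heuristic_id].add(j.site)
        if j.tool_verdict == j.human_verdict:
            matches[j.heuristic_id] += 1
    return {h: (matches[h] / totals[h], len(sites[h])) for h in totals}


def qualifying_heuristics(judgments, threshold=0.95, min_sites=20):
    """IDs of heuristics that meet `threshold` across >= `min_sites` sites.

    Both defaults are assumptions for illustration.
    """
    stats = accuracy_by_heuristic(judgments)
    return sorted(
        h for h, (accuracy, n_sites) in stats.items()
        if accuracy >= threshold and n_sites >= min_sites
    )


if __name__ == "__main__":
    sites = [f"site-{i}" for i in range(20)]
    # Toy heuristic 1: tool and humans agree on all 20 sites.
    data = [Judgment(s, 1, "violated", "violated") for s in sites]
    # Toy heuristic 2: tool disagrees with humans on 2 of 20 sites (90%).
    data += [
        Judgment(s, 2, "adhered", "adhered" if i < 18 else "violated")
        for i, s in enumerate(sites)
    ]
    print(qualifying_heuristics(data))  # [1]
```

Under these assumptions, a heuristic that agrees with the human auditors on 18 of 20 sites (90%) would be held back, while one that agrees on all 20 would be added.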

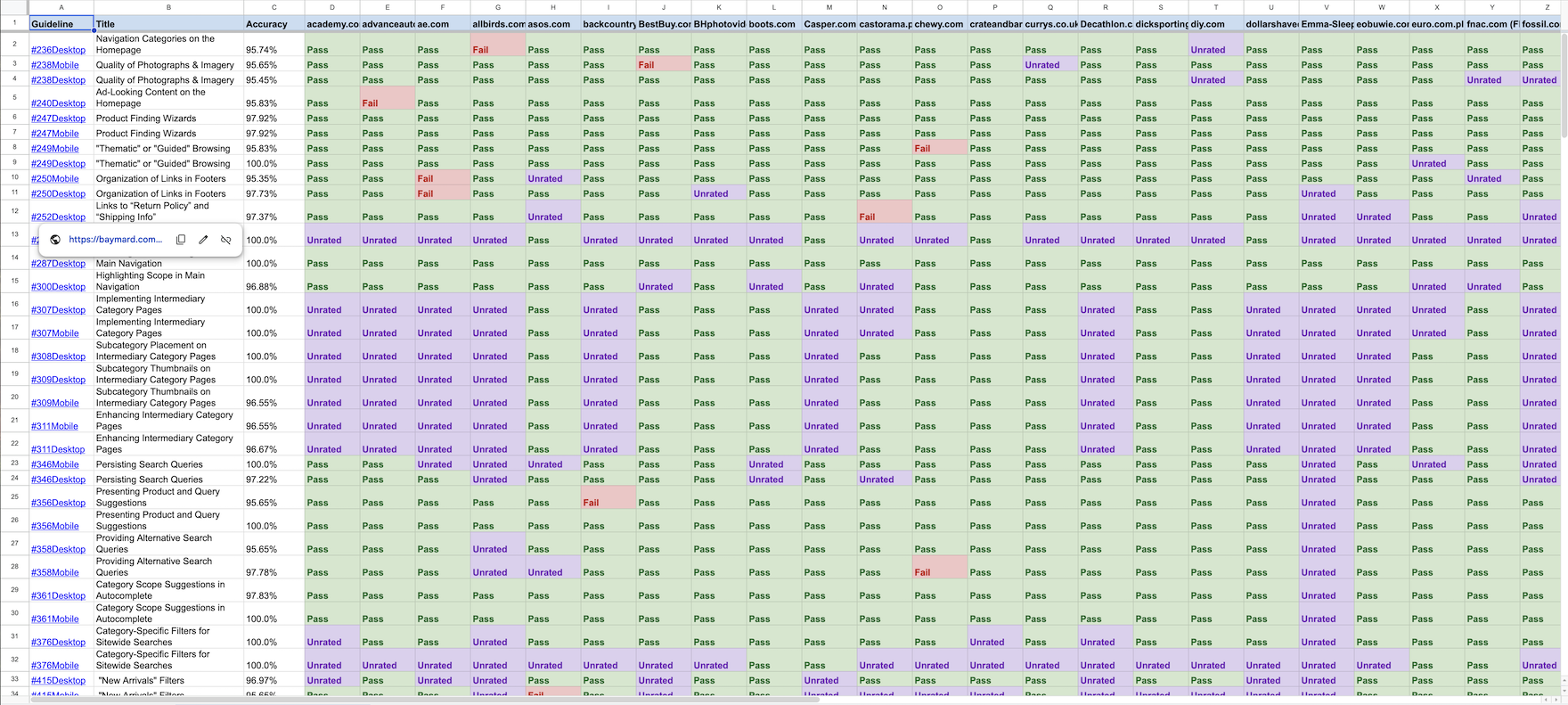

With that in mind, here are our results as of February 2026:

- UX-Ray can perform an automated heuristic evaluation using 207 UX heuristics with a 95% Accuracy Rate or higher, based on either live URLs or screenshots of websites or prototypes.

- The 207 UX heuristics are research-backed, as they are all based on Baymard’s 200,000+ hours of large-scale ecommerce UX research.

- The 95% Accuracy Rate is calculated by comparing UX-Ray’s results against a human-conducted UX heuristic evaluation of 48 different websites, with UX-Ray and Baymard’s UX auditors assessing the same websites using the exact same set of screenshots.

- The 48 test sites were selected to include a mix of large and medium-sized stores across the US, UK, and Europe, spanning common industries like apparel, groceries, and electronics. 10 of the sites were not in English but in German, French, Polish, Italian, Dutch, and Spanish. See the appendix with site screenshots.

The Raw Test Data for UX-Ray 2.0 Accuracy Rate

See the raw line-by-line comparison of the 48 sites across the 207 heuristics that achieved a 95+% Accuracy Rate.

- See the raw line-by-line Accuracy Rate results. (Feb 2026, UX-Ray version 2.0)

- Download all the page screenshots of the 48 websites that were assessed by both UX-Ray and our team of human experts

This Accuracy Rate test setup cost more than $100,000 to complete, but we believe it’s well worth the investment.

95% Accuracy Is Only Possible Due to 7 Years of Work to Increase Human Accuracy

UX-Ray is only possible because we have built a proprietary heuristic evaluation tool for human experts. We’ve spent 7 years manually mapping 8,000+ common UI components to specific heuristic evaluation outcomes.

Like most others, we’ve found that LLMs and generative-AI-based tools are excellent for tasks like:

- Creating a diverse set of results where there are no right or wrong answers (i.e., creative tasks)

- Delivering fast, synthesized answers where the tool follows a simple fact pattern (e.g., searching 1,000 pages of documents for the presence of a specific type of answer)

But for generating complex UX analyses and CRO suggestions, we’ve consistently found that while many generative AI tools can indeed produce a subset of good answers and results (if very detailed prompting is used), they also consistently make suggestions that harm conversions, and their large-scale Accuracy Rate is not high enough for commercial use (below 95% accuracy).

So, how have we built UX-Ray?

UX-Ray does not use an LLM or generative-AI-based approach to generate any of the UX analysis, UX reasoning, or UX thinking.

Moreover, UX-Ray never uses an LLM or generative-AI approach to even describe any UX opportunities or conversion improvements.

Instead, UX-Ray uses a cascade of more than 15 separate systems, most of which are not based on generative AI.

Each of the systems solves for a very specific part of the overall heuristic-evaluation process.

In UX-Ray, LLMs and generative AI are only used to classify which UI pattern is chosen for a specific UI component (e.g., “Here’s a screenshot of filters. Does the filtering values styling use checkboxes, link styling, and/or normal text styling?”).

This AI usage is narrow in scope, and the AI systems’ response options are predefined by our human UX experts and are very limited in number; often, only 2–10 answer options are available.

Importantly, in UX-Ray generative AI is not used to determine if any of those answers are good or bad.

And when improvements are possible, generative AI is also not used to determine what the improvement should be.

The improvement suggestions are solely based on our 200,000+ hours of large-scale UX research findings.
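A minimal Python sketch of this division of labor, assuming a hypothetical `classify` callable that stands in for the narrow LLM query (it is not a real API, and this is not UX-Ray’s code): the model may only pick one of a few predefined answers, and a human-authored rule table, not the model, decides whether that answer is a UX issue and what the research-based fix is. The component names, `ANSWER_OPTIONS`, `RULES`, `Finding`, and `evaluate` are illustrative; only guideline #774 and its dots-versus-thumbnails example come from this article.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical answer options, predefined by human UX experts per UI component.
ANSWER_OPTIONS = {
    "image_gallery_indicator": ("thumbnails", "dots", "none"),
    "filter_value_style": ("checkboxes", "links", "plain_text"),
}


@dataclass(frozen=True)
class Finding:
    guideline: int
    observation: str     # fixed text describing the detected UI pattern
    recommendation: str  # fixed, research-based text; never generated


# Human-authored rules: (component, detected pattern) -> outcome.
# Patterns missing from the table produce no finding.
# (Rules for filter styling are omitted in this sketch.)
RULES = {
    ("image_gallery_indicator", "dots"): Finding(
        guideline=774,
        observation="In your image gallery, you use indicator dots "
                    "instead of image thumbnails.",
        recommendation="Use thumbnails to indicate additional product images.",
    ),
}

# Hypothetical classifier signature: (screenshot, component, options) -> answer.
Classifier = Callable[[bytes, str, tuple], str]


def evaluate(component: str, screenshot: bytes,
             classify: Classifier) -> Optional[Finding]:
    """Run one narrow classification, then look up the outcome in RULES."""
    options = ANSWER_OPTIONS[component]
    # The only model-dependent step: choose one of the predefined options.
    answer = classify(screenshot, component, options)
    if answer not in options:
        # Out-of-vocabulary answers are discarded, never interpreted.
        return None
    # Whether the pattern is good or bad, and what to do about it,
    # comes from the human-authored table, not from the model.
    return RULES.get((component, answer))


if __name__ == "__main__":
    # A stub that always "sees" dots, standing in for a real model call.
    def always_dots(screenshot, component, options):
        return "dots"

    finding = evaluate("image_gallery_indicator", b"", always_dots)
    print(finding.observation if finding else "No issue detected")
```

Because the model’s only job here is picking from a short list, a wrong pick produces a wrong observation about the page rather than wrong UX reasoning, and any answer outside the list is dropped.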

The upside: Our approach provides a much higher Accuracy Rate than approaches that use generative AI to provide the actual UX analysis or improvement suggestions.

The downside: Our approach is extremely labor-intensive.

UX-Ray is only possible because we have built a proprietary heuristic-evaluation tool for human experts, in which, over the past 7 years, we have manually mapped 8,000+ common UI components to specific heuristic-evaluation outcomes across our 700+ UX heuristics (guidelines).

(Ironically, we started building this heuristic-evaluation tool because we wanted to increase the human interrater reliability and intrarater reliability rates for our UX audit service.)

“Failing Safely” Matters

Beyond achieving a 95% Accuracy Rate, another advantage of UX-Ray not relying on LLMs and generative AI for making the actual UX analysis or describing UX opportunities is that in the 5% of cases where UX-Ray is not accurate, it fails in a more “work-safe” way.

Let’s use the screenshot below to illustrate what we mean:

When generative AI is wrong in UX and CRO recommendations, it’s often because it generates a wrong solution or the UX reasoning itself is wrong.

So in the 25–50% of the time generative AI is wrong, it could recommend a change like:

“Simplify your image gallery layout by making additional images appear as dots instead of thumbnails. That simplifies your UI by taking up less space and is more calm to look at, which is generally considered good UX.”

As exemplified earlier in this article, such a UX design mistake made a large US retail client of ours miss out on a 1% increase in site conversion rate.

When UX-Ray is wrong, it can only fail in the UI style detection itself.

That’s because it only uses LLMs and generative AI to detect the style of a UI component, and never to conclude if that detected style is then good or bad.

So for the above image, in the 5% of cases where UX-Ray is wrong, it would say:

“In your image gallery, you use indicator dots instead of image thumbnails.”

However, this false opportunity would be flagged on a webpage that clearly already uses image thumbnails instead of indicator dots.

Anyone, including non-UXers, would instantly be able to spot this as an erroneously identified UX opportunity before proceeding.

This makes UX-Ray quite hallucination-safe, because the UX opportunities identified are not LLM-based or AI-generated.

Ensure Generative AI Supports Rather Than Hinders Your UX

The growth of generative AI has been exciting and impressive to observe.

But if you intend to use any AI tool that promises UX analysis or CRO suggestions, then it is reasonable for you to first ask to see the documentation for the AI tool’s Accuracy Rate — which is why we provide it here in this article for UX-Ray.

Any Accuracy Rate that is either undocumented or below 95% simply won’t be “safe for work” on a commercial website with actual revenue.

Sure, humans aren’t foolproof — even expert UXers with decades of experience will still misread UX issues or provide an analysis that’s lacking context or some UX details.

Yet human experts will almost never suggest changes that are so wrong that they actually harm the conversion rate, especially if they are only basing their heuristic evaluation on large-scale UX research.

With UX-Ray, we’ve decided to only include those heuristics that get an Accuracy Rate of at least 95%.

Going forward, we’ll add more of our 700+ heuristics into UX-Ray — but only as we can document UX-Ray’s ability to accurately diagnose issues, and do it consistently.

If you want to try out UX-Ray, you can do so on our Free plan and get the first 2 UX suggestions for your ecommerce site for free.