What Is Split Testing? 6 Split Test Ideas for E-Commerce

One of the best ways to boost conversion rates in e-commerce is to optimize your site using split testing.

However, random tests like changing the color of a buy button rarely provide enough insight to make meaningful UX design changes.

Keep in mind:

Split testing only results in substantial improvements when you take a data-oriented approach to solving a clearly defined problem.

In this article, we’ll explain what split testing is and how to do it effectively. We’ll also share some split-test ideas for e-commerce sites to help you test the changes most likely to improve conversions.

What Is Split Testing?

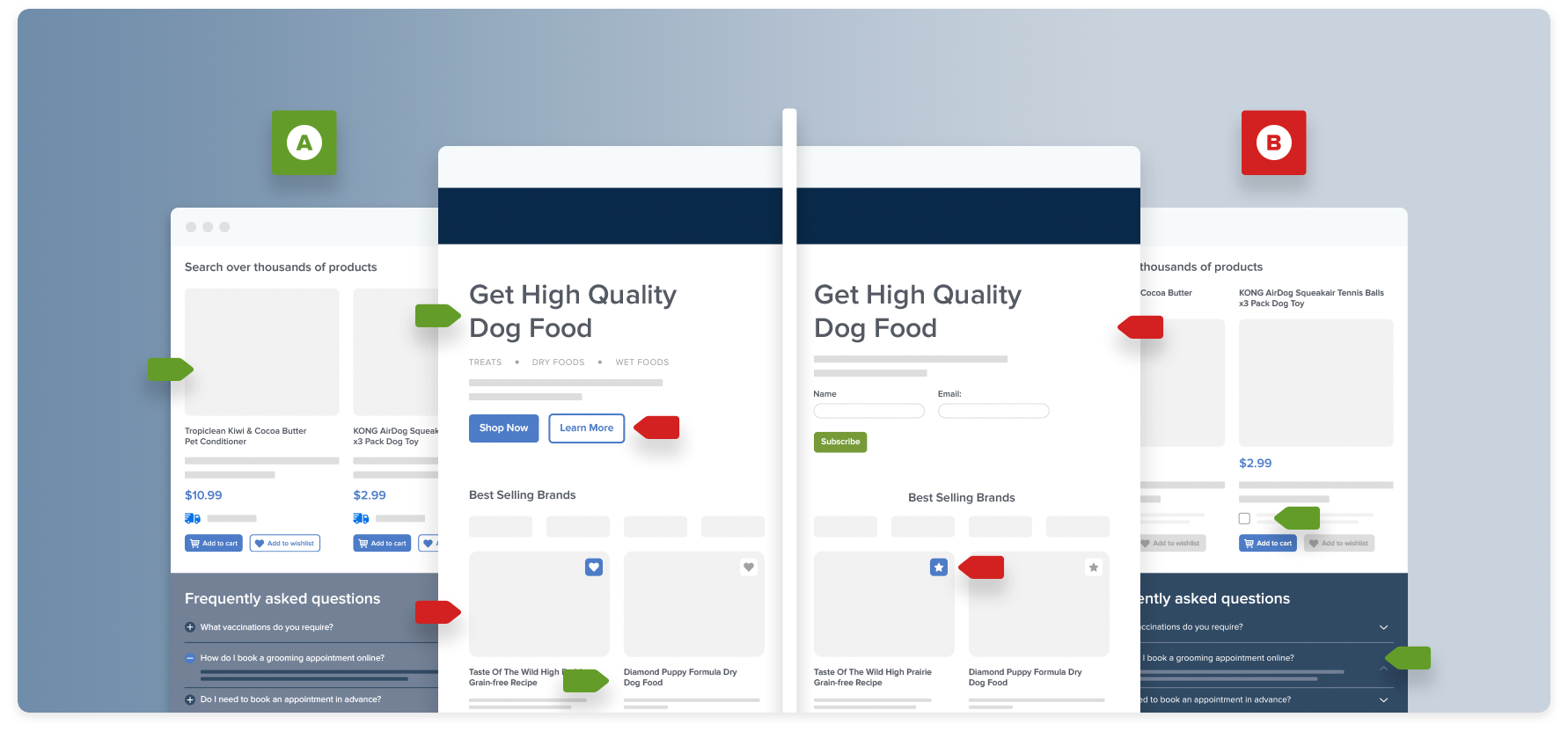

Split testing, or A/B testing, is the process of comparing two versions of a web page to determine which one is more effective at converting visitors into customers.

The original page is called the “control”, and the new page you’re testing is the “variation”.

Ideally, there is only one small difference between the control and the variation for each split test so that you can understand the true reason behind the difference in performance.

When you launch your split test, traffic to the page you’re testing is divided randomly between the two versions. You then track the performance of each version and analyze it with split testing software to identify the better-performing one.
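Split testing tools handle this random assignment for you. As a rough sketch of how it can work, the Python below uses hash-based bucketing, one common approach: hashing the visitor ID means a returning visitor keeps seeing the same version, while the overall split stays close to 50/50. The function name, the experiment key, and the 50/50 split are illustrative assumptions, not the API of any particular tool.

```python
import hashlib

def assign_variant(visitor_id: str, experiment: str, control_share: float = 0.5) -> str:
    """Hypothetical helper: assign a visitor to 'control' or 'variation'."""
    # Combine experiment and visitor so each experiment gets an independent split,
    # while a given visitor always lands in the same bucket for a given experiment.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the first 6 bytes (48 bits) of the hash to a float in [0, 1).
    # 48 bits fit exactly in a float, so the value can never round up to 1.0.
    bucket = int.from_bytes(digest[:6], "big") / 2**48
    return "control" if bucket < control_share else "variation"

# Usage: with many visitors, roughly half should land in each version.
assignments = [assign_variant(f"visitor-{i}", "checkout-test") for i in range(10_000)]
print(assignments.count("control"), assignments.count("variation"))
```

Hashing instead of calling a random number generator on every page view is a design choice: it needs no stored state, yet still keeps each visitor's experience consistent for the whole test.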

Why Should You Conduct a Split Test?

If you suspect that an element or aspect of your website isn’t working — or that you could make improvements to get better results — split testing can be a highly effective way to increase conversions and bring in revenue.

Split testing a design change has many other potential benefits, including:

- Learning how customers respond to changes in UX or UI

- Eliminating uncertainty and guesswork in the design process

- Establishing an iterative UX process of conversion rate optimization (CRO)

- Gaining unexpected insights into a customer’s wants and needs as they travel through a site

- Creating better and more effective designs and copy

- Maximizing traffic and visitor engagement

- Reducing risk by testing before committing to substantial changes

The goal of split testing is to find out what works best for your specific website and your audience. Don’t try to copy other sites, even if they are industry leaders.

What works for other sites — even major e-commerce sites like Amazon — may not work for your website due to a number of factors like differences in business goals, traffic sources, and customer expectations.

Stay focused on finding out what works for your customers instead of following someone else’s designs and implementations.

Split Test Ideas for E-Commerce

Looking for ideas on what to split test? Here are a few examples of elements to consider testing on your site.

Each of these changes is pre-validated by in-depth UX research into more than 100 leading e-commerce sites. But to make sure they’re right for your site and your customers, put them to the test.

Is CAPTCHA Necessary?

For most visitors, a CAPTCHA can be difficult to decipher. It often adds unnecessary friction to the sign-up process. Create a split test to see if conversion rates increase when you eliminate the CAPTCHA from your sign-up process.

You can also compare this data to the number of spam accounts that are created when you eliminate the CAPTCHA, so you can make an informed decision about whether this extra step is worth it.

If your website needs a CAPTCHA, take a peek at these six CAPTCHA usability tips.

Will a One-Page Checkout Work Better Than Multi-Step?

Some one-page checkouts perform better than their multi-page counterparts — but often, the tests that lead to these results are comparing apples to oranges. For instance, if you test a new, optimized one-page checkout, it will certainly convert better than a non-optimized, multi-step checkout.

When you split test a one-page checkout against a multi-step checkout on your site, make sure both versions are fully optimized before running the test.

Which Elements Increase Perceived Security During the Checkout Flow?

In our extensive usability testing, we found that the average user’s perception of a site’s security is largely determined by their “gut feeling”, which is tied directly to how visually secure a particular page looks.

Split test checkout pages that include visual cues, such as padlock icons, site seals placed close to the credit card fields, and other visual reinforcements, to see how each element affects conversions.


Is Your Live Chat Feature Implementation Too Intrusive?

Live chat features can be helpful when users are looking for a quick answer. However, they are frequently a source of frustration for users, popping up to disrupt and distract visitors.

During our testing, the most common live chat approaches – overlay dialogs, dialog pop-ups, and sticky/floating chat elements – each caused significant UX issues.

Consider testing whether your live chat implementation is causing visitors to leave the site.

Will a Static Free Shipping Threshold Nudge Users Into Qualifying?

Are your users clear about the spending requirements to receive free shipping?

Perhaps your e-commerce site offers free shipping on orders above a certain amount. There are typically two different ways of displaying the free shipping tier to users: a static threshold (“Free shipping for all orders over $50”) or a dynamic difference (“Spend $25.73 more to qualify for free shipping”).

If you currently display a static free shipping threshold, split test a version that instead displays the dynamic difference the user still needs to spend to qualify, or vice versa.
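To make the two treatments concrete, here is a minimal Python sketch that renders either message for a given cart. The message wording mirrors the examples above; the function name and the message shown once a cart already qualifies are hypothetical. `Decimal` is used instead of floats so currency amounts like $25.73 don't pick up rounding errors.

```python
from decimal import Decimal

def free_shipping_message(cart_total: Decimal, threshold: Decimal, dynamic: bool) -> str:
    """Hypothetical helper: build the free shipping message for one cart."""
    if cart_total >= threshold:
        # Hypothetical wording for carts that already qualify.
        return "Your order qualifies for free shipping"
    if dynamic:
        # Dynamic difference: tell the user exactly how much more to spend.
        remaining = threshold - cart_total
        return f"Spend ${remaining:.2f} more to qualify for free shipping"
    # Static threshold: the same message for every cart below the threshold.
    return f"Free shipping for all orders over ${threshold:.0f}"

print(free_shipping_message(Decimal("24.27"), Decimal("50"), dynamic=True))
# → Spend $25.73 more to qualify for free shipping
print(free_shipping_message(Decimal("24.27"), Decimal("50"), dynamic=False))
# → Free shipping for all orders over $50
```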

Will a Prominent Guest Checkout Option Improve Conversions?

Many sites require users to create an account before checking out. While this might seem logical, a forced account registration requirement is a direct cause of checkout abandonment.

Despite its importance to checkout UX, the guest option is frequently hidden, leading visitors to miss it and leave the site. Split test offering guest checkout as the most prominent option at the account selection step to see if more users complete a purchase.

How to Conduct a Split Test

We’ve already established that split testing is a good idea for improving conversions and examined some examples of things you can test out in your usability and conversion “laboratory”.

Now, what are the steps for completing your first split test and for making split testing a regular part of your development process?

1. Identifying the Problem

Think carefully before altering pages by popping in new images or reworking your checkout process.

What problem are you trying to solve?

All too often, people are so excited about the world of A/B testing that they skip over this step. They say:

“I saw an example of a site that changed the color of their ‘Buy’ button and got a (insert wildly high number here)% increase in sales! But when I implemented the same change on my site, it didn’t work — the new button actually performed worse than my original!”

The problem with this approach is that the e-commerce site developer didn’t have a clearly defined problem before they began testing. If the new version of the button didn’t increase conversions, they would have learned nothing. If it did boost conversions, they wouldn’t know exactly why it worked.

Home in on one problem

Instead of testing random ideas (or testing changes that you heard worked for other sites), take the time to decide on the problem you want to solve. This might be a high abandonment rate during your checkout process, a high bounce rate on a landing page, or another issue.

This step will give you a clear idea of the problem your new designs should solve, as well as your criteria for success. Did more users complete the second step of the checkout? Did the bounce rate decrease during your landing page split test?

2. Defining Your Hypothesis

A “hypothesis” is a proposed explanation, made on the basis of limited evidence, that serves as a starting point for further investigation.

Create a hypothesis about why you believe your problem is occurring. Examples of good hypotheses include “Our customers leave because they don’t immediately understand the product’s features” or “Our customers don’t trust the reviews on our product pages”.

Rely on data to help you define your hypothesis. Google Analytics (or your preferred analytics program) will display exactly what your bounce rate is for landing pages and at which point users are abandoning the checkout process. Use this information to decide what to test and what your hypothesis will be.

For example, one possible hypothesis might be:

After observing user behavior during our checkout process, we see that customers often abandon their carts during step two. We believe that moving to a one-step checkout process will lead to more conversions.
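To find the step where abandonment spikes, you can compute step-to-step drop-off from the funnel counts your analytics tool reports. A minimal Python sketch, assuming you have exported the number of users who reached each checkout step; the counts and the function name below are made up for illustration.

```python
def step_dropoff(step_counts: list[int]) -> list[float]:
    """Share of users who reach each step but never reach the next one."""
    return [1 - nxt / cur for cur, nxt in zip(step_counts, step_counts[1:])]

# Hypothetical counts of users reaching checkout steps 1 to 4.
counts = [10_000, 6_000, 2_400, 2_000]
rates = step_dropoff(counts)

# rates[0] is abandonment during step 1, rates[1] during step 2, and so on.
for step, rate in enumerate(rates, start=1):
    print(f"Step {step}: {rate:.0%} of users abandon before the next step")

worst_step = rates.index(max(rates)) + 1  # 1-based step with the highest abandonment
```

With these illustrative numbers, step two has the highest drop-off, which is the kind of evidence that would support the hypothesis above.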

Split testing will give you evidence that either supports or refutes your hypothesis.

3. Identifying Sample Size

To run a successful test, you need to drive a lot of traffic to your page versions. That means running your test long enough, with a large enough sample size, to get reliable results.

You will need to calculate the right sample size for what you intend to test, but in general: If you don’t get a lot of visitors to your site, you will need to run your split test longer to get statistically significant results.
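As a rough guide to what that calculation involves, the Python sketch below uses the standard normal-approximation formula for comparing two conversion rates. It assumes a two-sided test at a 5% significance level with 80% power, which are common defaults rather than requirements; the function name, the 3% baseline conversion rate, and the 10% relative lift are hypothetical. Your testing tool may use a different method, so its numbers can differ.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH version to detect the given relative lift
    with a two-sided two-proportion z-test (normal approximation)."""
    p1 = baseline_rate                        # control conversion rate
    p2 = baseline_rate * (1 + relative_lift)  # rate you hope the variation reaches
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = NormalDist().inv_cdf(power)            # critical value for the desired power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)

# Hypothetical example: 3% baseline conversion rate, hoping to detect a 10% relative lift.
print(sample_size_per_variant(0.03, 0.10))  # roughly 53,000 visitors per version
```

Smaller expected lifts and lower baseline conversion rates push the required sample up sharply, which is why sites with less traffic need to run tests longer.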

If you’re using a split testing or CRO platform, the tool will often include a calculator that shows how reliable your test data is, based on visitor numbers and conversions.

4. Creating Your Variation

With your hypothesis and sample size figured out, it’s time to come up with different variations you can test. These will be real variations, not just trivial changes based on rumors about results on other sites.

If you need help figuring out what specific alterations to make on your variation pages, Baymard Premium provides numerous ideas. The UX recommendations in Baymard Premium are pre-validated by 71,000+ hours of usability testing.

Make the change you’ve decided upon and create two versions of your page, remembering to only test one variable at a time.

Control your test

To avoid skewing results, eliminate any outside factors that could unduly affect the conversion rate of your page (traffic source, referring ad, time of day, etc.). Brainstorm all possible external factors, and keep as much as possible stable in your split test to get reliable results.

Address as many testing obstacles as possible before beginning your test, but always keep an eye out — obstacles to accurate results can pop up at any time during your test.

5. Testing Your Campaign

This is the “quality assurance” phase, where you test with a small sample to make sure your split versions are showing as expected.

Check every aspect of your campaign to make sure nothing threatens the accuracy of your results. Are all of your call-to-action buttons working? Does your post-click landing page look the same in every browser? Are your ad links working correctly?
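One check worth adding to this QA pass is a sample ratio mismatch test: if the visitor counts in each version differ from your configured split by more than chance would explain, something such as a redirect, a caching rule, or a broken tracking tag is probably routing traffic unevenly. A minimal Python sketch using a chi-square goodness-of-fit test with one degree of freedom; the function name and counts are hypothetical, and the p < 0.001 alarm threshold is a commonly used, conservative convention rather than a fixed rule.

```python
import math

def srm_p_value(control_visitors: int, variation_visitors: int,
                expected_control_share: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for the observed traffic split."""
    total = control_visitors + variation_visitors
    expected_control = total * expected_control_share
    expected_variation = total - expected_control
    chi2 = ((control_visitors - expected_control) ** 2 / expected_control
            + (variation_visitors - expected_variation) ** 2 / expected_variation)
    # With 1 degree of freedom, P(chi-square > chi2) equals erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))

# Hypothetical QA counts from a small test sample with a configured 50/50 split.
p = srm_p_value(1_100, 900)
if p < 0.001:
    print(f"Possible sample ratio mismatch (p = {p:.2g}); check how traffic is routed.")
```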

6. Driving Traffic to Your Pages

As noted above, make sure all of your traffic comes from the same place for the entirety of the test.

Keep running your test until you’ve hit the sample size identified in step three, or the amount of traffic your split testing software requests.

If you reach statistical significance in less than a week, keep running the test for a few additional days. Conversion rates can vary considerably by day of the week, because visitors are more likely to buy on some days than on others.
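To plan for this, you can turn a required sample size into a run time. The Python sketch below is a simple planning aid that assumes steady daily traffic split evenly across the versions; the function name and numbers are hypothetical, and rounding up to whole weeks, so that each day of the week is represented equally, is an assumption about how to apply the advice above rather than a fixed rule.

```python
import math

def planned_test_days(sample_per_variant: int, variants: int,
                      daily_visitors: int, min_days: int = 7) -> int:
    """Hypothetical helper: days to run a test, rounded up to whole weeks."""
    # Days needed for every version to reach its sample size.
    days_needed = math.ceil(sample_per_variant * variants / daily_visitors)
    # Never stop before min_days, even if the sample size is reached sooner.
    days = max(days_needed, min_days)
    # Round up to a whole number of weeks so each weekday appears equally often.
    return math.ceil(days / 7) * 7

# Hypothetical: 20,000 visitors per version, 2 versions, 5,000 test visitors per day.
print(planned_test_days(20_000, 2, 5_000))  # 8 days of traffic, rounded up to 14
```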

7. Analyzing and Optimizing

Once you’ve run your test for at least a week and hit your sample size, analyze your results and identify which variation produced the best results of your split test.
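Your split testing software runs this analysis for you, but a minimal sketch of one standard method, the two-proportion z-test, shows what “statistically significant” means for conversion rates. It is written in Python, the function name and counts are hypothetical, and it assumes you check once after reaching your planned sample size rather than repeatedly mid-test. Some tools use other methods (for example, Bayesian or sequential approaches), so their numbers can differ.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(conv_control: int, visitors_control: int,
                          conv_variation: int, visitors_variation: int) -> tuple[float, float]:
    """Return (absolute difference in conversion rate, two-sided p-value)."""
    rate_control = conv_control / visitors_control
    rate_variation = conv_variation / visitors_variation
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_control + conv_variation) / (visitors_control + visitors_variation)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_control + 1 / visitors_variation))
    z = (rate_variation - rate_control) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_variation - rate_control, p_value

# Hypothetical results: control converts 300 of 10,000 visitors, variation 360 of 10,000.
diff, p = two_proportion_z_test(300, 10_000, 360, 10_000)
print(f"Difference: {diff:.2%}, p-value: {p:.3f}")
```

A p-value below 0.05 is the conventional threshold for calling the variation the winner; above it, treat the result as inconclusive rather than as proof that the variation failed.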

A/B testing software will show how each of your pages performed. If your variation is the “winner”, apply what you’ve learned from the experiment to other relevant pages on your website. If your variation didn’t win, don’t worry: use your split test as a learning experience, and generate a new hypothesis.

The list of things you can experiment with during split tests is limitless, so keep iterating and testing all aspects of your site to improve your conversion rate and boost revenue.

Refine Your Split Test Ideas with UX Research

With Baymard Premium, you get access to over 500 recommendations for improving UX performance – research-backed ideas you can use to conduct your own split tests. Once you’ve determined the goal of your tests, you can use the UX guidelines and page design examples to establish a hypothesis and decide exactly what changes to test as you optimize your site.

Find out how the world’s leading e-commerce companies use Baymard Premium to test design changes and create high-performing sites.

Research Director and Co-Founder

Christian is the research director and co-founder of Baymard. Christian oversees all UX research activities at Baymard. His areas of specialization within e-commerce UX are checkout, form fields, search, mobile web, and product listings. Christian is also an avid speaker at UX and CRO conferences.