Key Takeaways

- Some users have difficulty gauging the overall “fit” in reviews

- Users unable to find useful “fit” info in reviews risk selecting an incorrect size or discarding suitable items

- Yet 33% of sites fail to aggregate “fit” info provided in individual reviews


In Baymard’s large-scale UX testing of Apparel & Accessories, users were observed to predominantly turn to reviews in order to determine how an apparel item fits — for example, whether a pair of pants or a top fits “too small”, “too large”, or “true to size”.

However, several participants in testing had difficulty finding the “fit” info in the user reviews, leading them to incorrectly assess an item’s overall “fit”.

As a result, without a clear way to identify and assess relevant “fit” info in reviews, some users might select the wrong size — or even pass on purchasing an item.

To enable users to efficiently pinpoint an item’s fit, apparel and accessories sites should include an aggregate “fit” subscore in the reviews section.

However, our e-commerce UX benchmark shows that 33% of sites don’t aggregate any “fit” info provided in individual reviews, leaving users to work out an item’s overall fit from the individual reviews themselves, often inaccurately.

This article will discuss our latest Premium research findings on how to present “fit” info in the user reviews:

- Why users have difficulty finding useful “fit” info

- How providing an aggregate “fit” subscore helps users efficiently determine an item’s overall fit

- How providing “fit” info as a structured component for individual reviews helps corroborate the aggregate subscore

Why Users Have Difficulty Finding Useful “Fit” Info

“I’m someone that hates wearing a belt, so I look for jeans that fit at the waist.” Because “fit” info wasn’t evident in the reviews for a pair of jeans (first image), and not all of the 13 reviews mentioned fit, a participant at Madewell had to closely read each review to glean any “fit” info (second image): “I like these, but I did see someone that said that they sized down. So I don’t want it to be too big.” Yet, still unsure about fit after reading all the reviews, she turned next to the size guide.

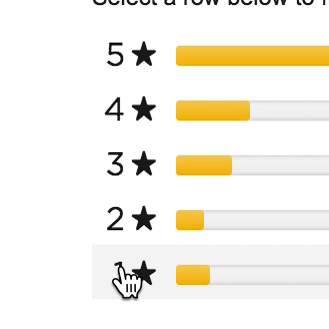

“They said the ‘Quality’, the ‘Style’, and the ‘Value’…were great. They didn’t really say if it was true to size or whatever, but I’m assuming [it was].” A different participant at Madewell also turned to the reviews to find out about the fit of a pair of jeans. She first checked the subscore ratings chart, which didn’t include “fit” information (first image). With no specific “fit” info highlighted in the reviews either, she tried to glean “fit” info from the text. After reading only 3 of 5 reviews, only 2 of which mentioned fit (second image), she assumed that the jeans were true to size and added them to her cart in her go-to size.

During testing, several participants who approached the reviews to discover the fit of an apparel item were not able to easily pinpoint any “fit” info provided by reviewers.

Some participants therefore attempted to read every review in granular detail in an effort to draw out any “fit” info within.

Even more problematically, participants had to then mentally gauge the overall consensus about “fit” across all the reviews in order to apply the feedback to their size-selection process — a task difficult to accurately perform on the fly.

In particular, in cases where an item had a large number of reviews — one item had 2,155 — participants couldn’t realistically read every review and therefore based their findings about fit on the handful of reviews that they were able to read.

As a result, given that they based their findings on a subset rather than the entirety of reviews, some participants risked incorrectly gauging the overall fit of items.

“I’m going to look at the reviews and see what people are saying.” A participant at UNIQLO had to mentally summarize the individual “fit” subscores (first image; note the “How it fits” text beneath the individual star ratings) for 14 reviews about a pair of jeans in order to gain a clear idea of users’ overall “fit” recommendation (second image): “This person’s saying…They fit too big. This person’s [also] saying they fit too big. ‘True to size’, ‘true to size’, ‘true to size’…. Person saying they fit small. So, the majority of people are saying they fit true to size, so I would just get my regular size.”

“I like to read usually if this item is true to size. So sometimes certain brands, shoes will be one size smaller on me, and then other brands, shoes will be one size bigger. So I think it really matters…I want to hear it from a customer that’s tried it that says it is true to size.” A participant at Hush Puppies wanting to know the fit of a pair of boots first lingered for 15 seconds at the top of the reviews, where she only found bar charts for “Comfort”, “Style”, and “Quality” (first image). She then proceeded to carefully examine the “fit” info within the reviews — reading each reviewer’s narrative as well as looking at the “Size fit” text beneath each blurb (second image). After reading 6 (out of 20) reviews, she came to the conclusion that the overall fit was “true to size”, even though some of the reviews she looked at recommended different fits (third image).

During testing, some sites did call out “fit” information in individual reviews; for example, including “Fit: true to size / runs large / runs small” as a structured component to each individual review.

However, this forces users intent on knowing the aggregate “fit” subscore for an item to mentally tally up each individual subscore on an invisible continuum from “too small” to “too big”.

By relying on their overall impression of the individual “fit” subscores (not all of which users will bother to read) — rather than on actual calculations — users’ interpretation of the aggregate score will frequently be inaccurate.

How Providing an Aggregate “Fit” Subscore Helps Users Efficiently Determine an Item’s Overall Fit

“I like when they sum it up right here without having to look through all the reviews…This ‘Size’ meter with ‘true to size’ looks like it’s leaning towards ‘Runs Large’, so that’s very important. I think I would order maybe a half size down. Then the ‘Fit’: ‘Runs Narrow’, okay, so I would probably pick a wider size.” This participant at Cole Haan was impressed that “Size” and “Fit” subscore summaries were included at the top of the reviews for a pair of boots, using them to determine that she needed to get a size that was both smaller and wider than her typical size.

“I am very familiar with Adidas…I do know my size.” A participant at Adidas first selected her size “S” for a tank top (first image) and next read the reviews, stopping first to take note of the aggregate “fit” subscore (second image): “The fit is a little loose, so I’m happy that I’m going with a small.” She then read through a few individual reviews to corroborate the overall “fit” recommendation (third image): “Yeah, running ‘a little loose’. So that makes me feel more confident in my decision.”

“[‘Fit’], that’s like right in the middle. I like that they have this, they ask if it ‘Runs Small’ versus ‘Large’. ‘Quality’, like yeah I care, but I’m more interested in the ‘Fit’.” A participant at Columbia Sportswear, interested in a pullover, was glad that “fit” was included in the aggregate subscores at the top of the reviews.

“I always like to see if they have any reviews on it…It seems like it’s true to size.” A participant at Express who consulted the reviews for a blazer homed in on the aggregate “fit” subscore (first image), taking into account the “true to size” rating in her decision to add her regular size to her cart (second image).

Therefore, to provide an overview of an apparel product’s fit, it’s important to include an aggregate “fit” subscore in the reviews section.

As one participant indicated, the aggregate “fit” subscore allows one to understand an item’s overall fit at a glance: “Well, I usually would read the reviews if the site didn’t have the thing [aggregate “fit” subscore] that basically said that the customer said it was true to size. So I would usually read the reviews to find out whether they thought they fit true to size.”

An aggregate “fit” subscore saves users the time and effort of having to scan individual reviews for this information — an insurmountable task if there are hundreds of reviews.

During testing, an aggregate subscore represented by a bar chart scale that was rated from “too small” to “too large” (or similar terminology) performed well.
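To make the idea of an aggregate “fit” subscore concrete, the following is a minimal sketch of how one might be calculated from the structured “fit” answers in individual reviews. It is not taken from any site in the study: the label names, the -1 to +1 scale, the ±0.25 threshold, and the `aggregate_fit` function are all assumptions chosen for illustration.

```python
from collections import Counter

# Hypothetical scale: position of each structured "fit" answer on a
# -1 (runs small) .. +1 (runs large) continuum. The labels are assumptions.
FIT_SCALE = {"runs small": -1.0, "true to size": 0.0, "runs large": 1.0}


def aggregate_fit(ratings):
    """Summarize per-review fit answers into counts, a mean position, and a label."""
    # Ignore reviews that did not include a recognized fit answer.
    known = [r for r in ratings if r in FIT_SCALE]
    if not known:
        return None  # nothing to aggregate; show no subscore rather than guess
    counts = Counter(known)
    mean = sum(FIT_SCALE[r] for r in known) / len(known)
    # Assumed threshold: anything within +/-0.25 of center reads as "true to size".
    if mean <= -0.25:
        label = "runs small"
    elif mean >= 0.25:
        label = "runs large"
    else:
        label = "true to size"
    return {"count": len(known), "counts": dict(counts), "mean": mean, "label": label}


# Illustrative data only, not taken from the study.
reviews = ["runs large", "runs large", "true to size", "true to size",
           "true to size", "runs small", None]
summary = aggregate_fit(reviews)
print(summary["label"], round(summary["mean"], 2), summary["count"])
# prints: true to size 0.17 6
```

The mean position could drive the marker on a bar chart scale, while the per-label counts let the site show how many reviewers chose each answer. Returning `None` when no review contains a fit answer is a design choice in this sketch, so that an empty subscore is never displayed as “true to size”.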

How Providing “Fit” Info as a Structured Component for Individual Reviews Helps Corroborate the Aggregate Subscore

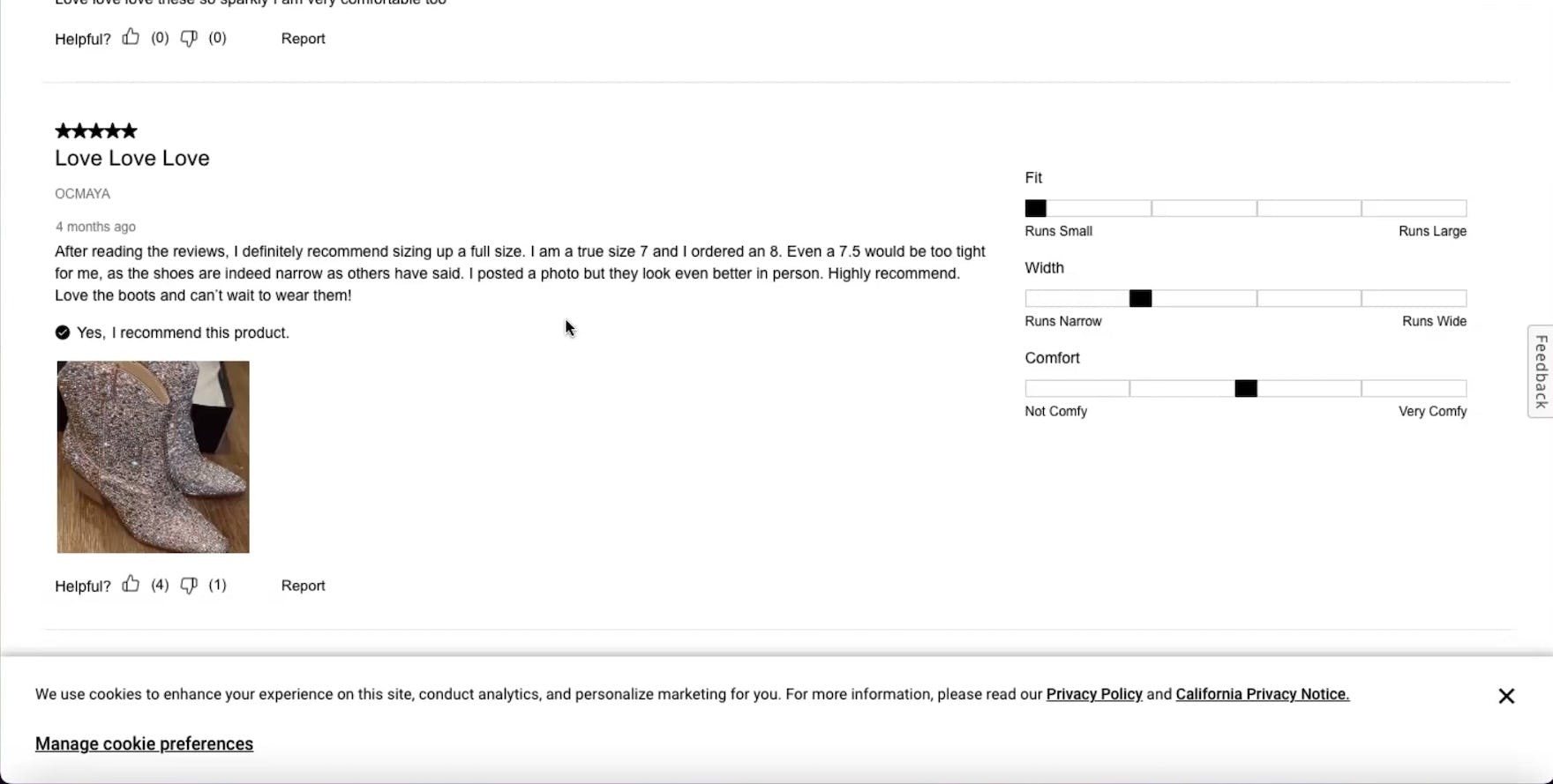

“Okay so these are cute, these are hip!…So for this I would definitely go down to the reviews and see what people are saying…And then it looks like people are saying these run small, so I would probably get it one size up.” At DSW, a participant concerned about the fit of a pair of boots headed to the reviews and paused on the top aggregate subscores to take into account customers’ overall feedback about fit. She then read the individual reviews, deriving confidence from the fact that both the reviewers’ blurb text and the “Fit” subscores corroborated the aggregate “Fit” subscore’s rating, which skewed to “Runs Small”: “And this person’s saying ‘[they recommend] sizing up a full size.’”

While it might seem redundant to provide individual “fit” subscores in addition to an aggregate “fit” subscore, testing showed that participants differed in how they liked to digest information provided in reviews.

At one extreme, some participants wanted to quickly get to the point about “fit”, while at the other, some enjoyed the granular aspect of “researching” all the reviews by reading through each one.

Moreover, users who take note of an aggregate “fit” subscore might still want more context around why specific reviewers rated the fit of an item the way they did.

Without an aggregate “fit” subscore, users will take longer to figure out the fit of an item.

Conversely, providing only the high-level aggregate “fit” subscore will sit uneasily with some users, who will wonder what evidence the site relied on to calculate its score.

Including “fit” subscores as a structured component of individual reviews lets users corroborate the aggregate subscore and adds a level of transparency that users will appreciate, which in turn boosts their trust in the site.

Notably, consider also implementing these review-specific “fit” ratings as bar charts, since text summaries may be overlooked by users.

Help Apparel Users Quickly Determine an Item’s Overall Fit from the User Reviews

“So let’s see what people are saying”. Without any “fit” aggregate subscores — let alone any subscores at all — a participant looking for a polo at Puma tried (and failed) to read each of the 21 individual reviews to gain consensus about the fit and quality of the top.

As our research has shown, arriving at a useful consensus regarding the feedback about fit in reviews can be a difficult task for users.

By including an aggregate “fit” subscore in the reviews section — and providing individual “fit” subscores in addition — sites can help users efficiently select their correct size.

However, when reviews make it difficult to determine the overall fit at a glance, users are burdened with the unrealistic task of calculating the overall “fit” score on their own.

Yet 33% of sites don’t allow users to easily understand an item’s overall fit — risking that users incorrectly gauge the fit and select the wrong size.
